The paper presents several extensions to skip-gram model which is an efficient method for learning high-quality distributed vector representation that capture large number of precise syntactic and semantic word relationship, these extensions improve both the quality of the vectors and the training speed. By subsampling of the frequent words it obtains significant speedup and also learn more regular word representations. The paper also describes a simple alternative to the hierarchical softmax called negative sampling.

Distributed representation of words in a vector space help learning algorithms to achieve better performance in natural language processing tasks by grouping similar words.

Skip-gram Model

Skip-gram model is an efficient method for learning high quality vector representations of words from large amount of unstructured text data. The word representation computed using neural networks are very interesting because the learned vectors explicitly encode many linguistic regularities and patterns. For example, the result of a vector calculation vec(“Madrid”) - vec(“Spain”) + vec(“France”) is closer to vec(“Paris”).

Subsampling of frequent words during training results in a speedup of around 2x - 10x and improves accuracy of the representation of less frequent words.

A simplified version of Noise Contrastive Estimation(NCE) can be used for training the Skip-gram model.

The extension from word based to phrase based models is relatively simple. First identify a large number of phrases using a data-driven approach, and then treat the phrases as individual tokens during the training. Simple vector addition can often produce meaningful results. For example, vec(“Germany”) + vec(“Capital”) is close to vec(“Berlin”).

The training objective of the Skip-gram model is to find word representation that are useful for predicting the surrounding words in a sentence or a document. Given a sequence of training words, the objective is to maximize the average log probability.

Hierarchical Softmax

The hierarchical softmax uses a binary tree representation of the output layer with the W words as its leaves and, for each node, explicitly represents the ratline probabilities of its child nodes. These define a random walk the assigns probabilities to words. The hierarchical softmax has one representation for each word and one representation for every inner node of the binary tree. The main advantage is that instead of evaluating W output nodes in the neural network to obtain the probability distribution, it is needed to evaluate only about log₂(W) nodes. In phrase Skip-gram model Hierarchical Softmax achieved lower performance when trained without subsampling. The best representations of phrases are learned by model with the hierarchical softmax and subsampling.

Negative Sampling

An alternative to the hierarchical softmax is Noise Contrastive Estimation (NCE). NCE posits that a good model should be able to differentiate data from noise by means of logistic regression. NCE can be shown to approximately maximize the log probability of the softmax. The task is to distinguish the target word from draws from the noise distribution using logistic regression, where there are k negative samples for each data sample. The values of k in range 5-20 are useful for small training datasets while for large datasets the k can be as small as 2-5. NCE needs both samples and the numerical probabilities of the noise distribution. It has noise distribution as free parameter.

Subsampling

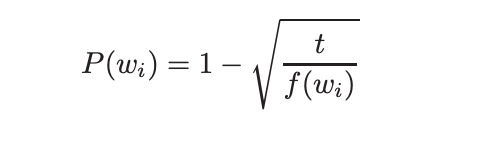

To counter the imbalance between the rare and frequent words a simple subsampling approach was used: each word wᵢin the training set is discarded with the probability computed by the formula

Where f(wᵢ) is the frequency of word wᵢ and t is a chosen threshold, typically around 10⁻⁵. This subsampling formula aggressively subsamples words whose frequency is greater that t while preserving the ranking of the frequencies.

Results

For training the Skip-gram model, a large dataset consisting of various news article (an internal Google dataset with one billion words) was used. All words the occurred less than 5 times in the training data were discarded, which resulted in a vocabulary of size 692k. Negative sampling outperforms the Hierarchical Softmax on the analogical reasoning task, and has even slightly better performance than the Noise Contrastive Estimation.

Learning Phrases

To learn vector representation for phrases, first find words that appear frequently together, and infrequently in other context. For example, “New York Times” and “Toronto Maple Leafs” are replaced by unique tokens in the training data, while a bigram “this is” will remain unchanged. A simple data driven approach was used where phrases are formed based on the unigram and bigram counts. The quality of the phrases representations were evaluated using a new analogical reasoning task that involves phrases.

Takeaways:

- Distributed representations of words and phrases with the Skip-gram model exhibit linear structure that makes precise analogical reasoning possible.

- Computationally efficient model architecture

- Improvement in the quality of the learned word and phrases and representations

- Subsampling of frequent words results in both faster training and significantly better representation of uncommon words

- Negative sampling algorithm is an extremely simple training method that learns accurate representations especially for frequent words.

- Word vectors can be somewhat meaningfully combined using just simple vector addition

- Another approach for learning representations of phrases is to simply represent the phrases with a single token.

Disclosure

Most of the things are directly from the paper. This post is meant to be a one place for all the papers that I read and take notes. You can read the paper in its entirety here.