Natural language conversation is one of the most challenging problems in artificial intelligence: it involves language understanding, reasoning, and the use of common-sense knowledge. The task at hand, referred to as Short-Text Conversation (STC), considers only a single round of conversation. Each round consists of two short texts: the first is an input from a user (referred to as the post), and the second is a response given by the computer.

Overview

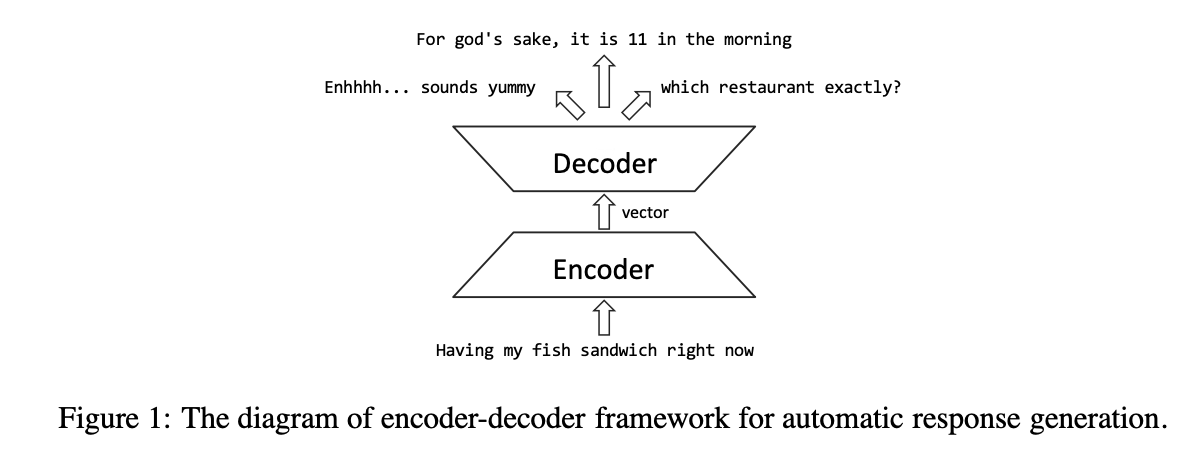

The paper takes a probabilistic approach to the response generation problem and proposes a neural encoder-decoder for the task, named the Neural Responding Machine (NRM). The encoder-decoder model, as illustrated in Figure 1, first summarizes the post as a vector representation, then feeds this representation to the decoder to generate a response. The paper further generalizes this scheme to let the post representation change dynamically during generation, following the idea in (Bahdanau et al., 2014), originally proposed for neural machine translation with automatic alignment. NRM essentially estimates the likelihood of a response given a post.

Neural Responding Machine for STC

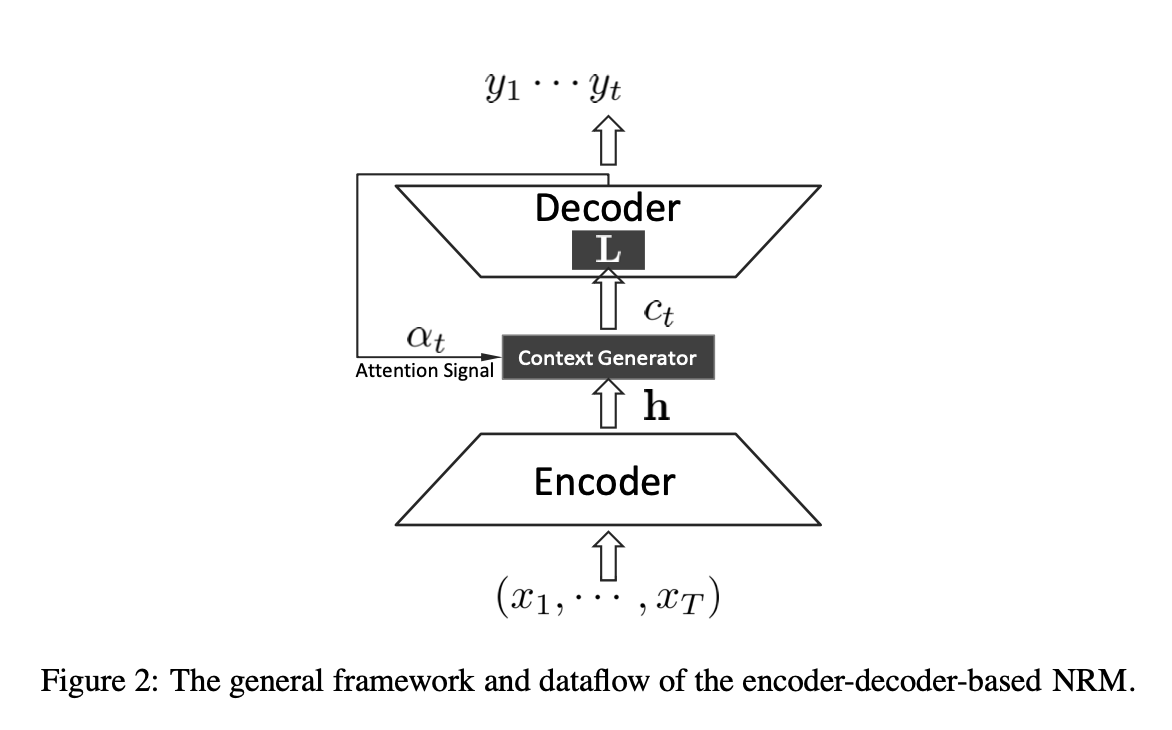

The basic idea of NRM is to build a hidden representation of a post and then generate the response from it, as shown in Figure 2. In this illustration, the encoder converts the input sequence x = (x_1, …, x_T) into a set of high-dimensional hidden representations h = (h_1, …, h_T), which, along with the attention signal at time t (denoted α_t), are fed to the context generator to build the context input to the decoder at time t (denoted c_t). Then c_t is linearly transformed by a matrix L (part of the decoder) into a stimulus for the generating RNN, which produces the t-th word of the response (denoted y_t).

In NRM, L plays a more difficult role than it does in machine translation: it needs to transform the representation of the post (or some part of it) into a rich representation of many plausible responses. The role of the attention signal is to determine which part of the hidden representation h should be emphasized during generation. We use a Recurrent Neural Network (RNN) for both the encoder and the decoder because of its natural ability to summarize and generate word sequences of arbitrary length.

The Computation in Decoder

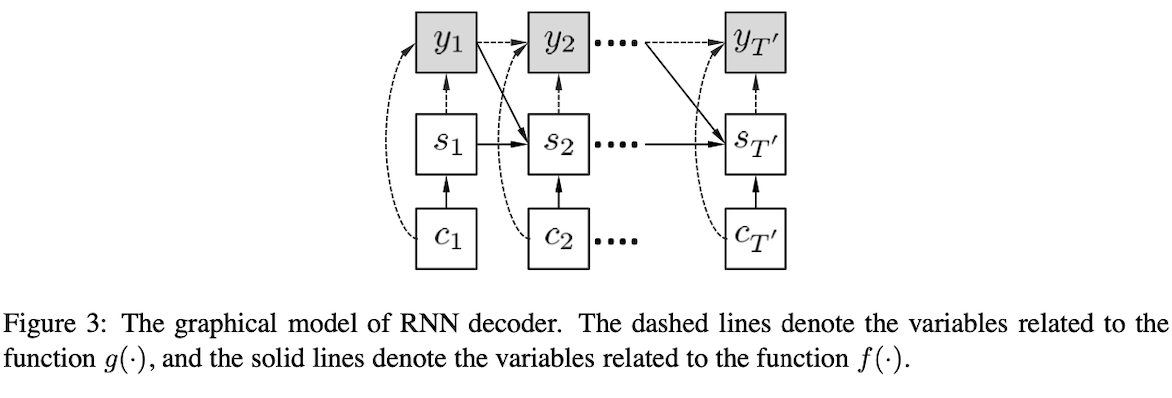

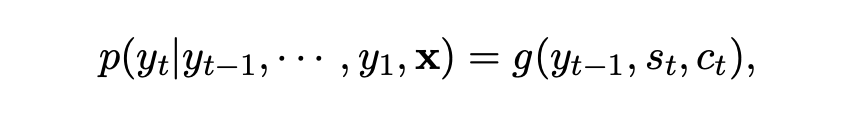

Figure 3 gives the graphical model of the decoder, which is essentially a standard RNN language model except conditioned on the context input c. The generation probability of the t-th word is calculated by

where y_t is a one-hot word representation, g(·) is a softmax activation function, and s_t is the hidden state of the decoder at time t, calculated by

Here f(·) is a non-linear activation function, and the transformation L is usually absorbed into the parameters of f(·). f(·) can be a logistic function, a long short-term memory (LSTM) unit, or a gated recurrent unit (GRU).
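For reference, the two relations described in this section can be written out explicitly. This is a sketch in the section's own symbols (y_t, s_t, c_t, f, g); the exact argument lists are an assumption based on the standard form of a context-conditioned RNN language model, not a transcription of the equation image.

```latex
% Generation probability of the t-th word (g is a softmax)
p(y_t \mid y_{t-1}, \ldots, y_1, \mathbf{x}) = g(y_{t-1}, s_t, c_t)

% Decoder hidden state at time t (f is a logistic function, LSTM, or GRU)
s_t = f(y_{t-1}, s_{t-1}, c_t)
```

Read this way, the only difference from a plain RNN language model is the extra argument c_t, which carries the information from the post into every decoding step.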

The Computation in Encoder

We consider three types of encoding schemes: 1) the global scheme, 2) the local scheme, and 3) the hybrid scheme, which combines 1) and 2).

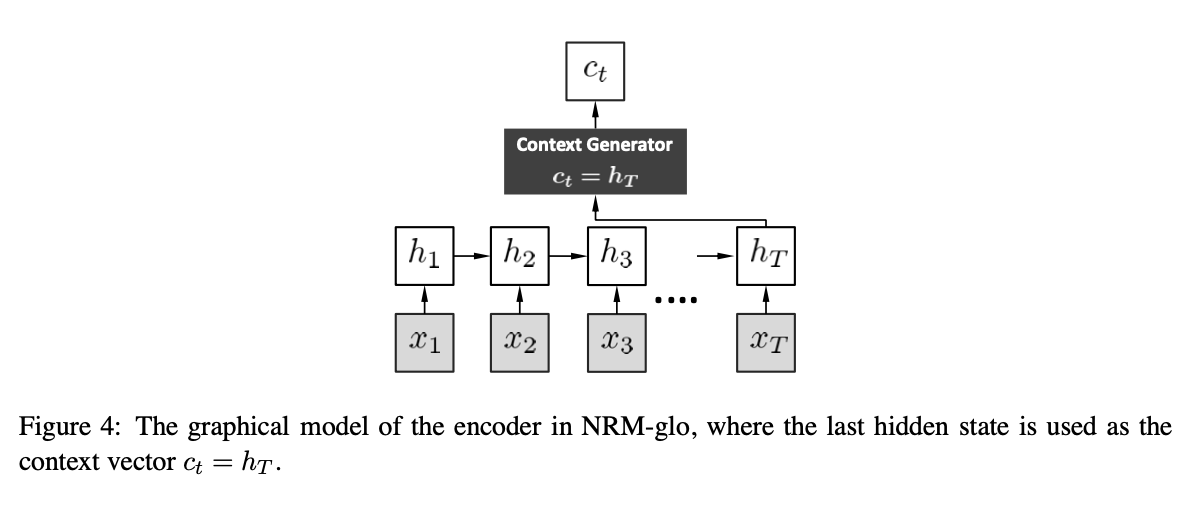

Global Scheme: Figure 4 shows the graphical model of the RNN encoder and the related context generator for the global encoding scheme. The hidden state at time t is calculated by h_t = f(x_t, h_{t−1}), and with a trivial context generation operation, the scheme simply uses the final hidden state h_T as the global representation of the sentence. An NRM with this global encoding scheme is referred to as NRM-glo.
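The recurrence h_t = f(x_t, h_{t−1}) and the "use the last state" rule can be sketched in a few lines of plain Python. This is a minimal illustration, not the paper's implementation: f is assumed to be a simple tanh cell, and the names `rnn_step`, `encode_global`, `W_x`, and `W_h`, as well as the toy weights and inputs, are hypothetical.

```python
import math

def rnn_step(x_t, h_prev, W_x, W_h):
    """One step h_t = f(x_t, h_{t-1}), assuming f = tanh(W_x x_t + W_h h_{t-1})."""
    h_t = []
    for i in range(len(h_prev)):
        total = sum(W_x[i][k] * x_t[k] for k in range(len(x_t)))
        total += sum(W_h[i][k] * h_prev[k] for k in range(len(h_prev)))
        h_t.append(math.tanh(total))
    return h_t

def encode_global(xs, W_x, W_h):
    """Run the RNN over a non-empty post; return all states and the final one."""
    h = [0.0] * len(W_h)          # initial hidden state h_0 = 0 (an assumption)
    states = []
    for x_t in xs:
        h = rnn_step(x_t, h, W_x, W_h)
        states.append(h)
    return states, states[-1]     # the final state h_T is the global context

# Toy example: a post of three 2-dimensional word vectors (made-up numbers).
W_x = [[0.5, -0.2], [0.1, 0.3]]
W_h = [[0.0, 0.4], [-0.3, 0.2]]
post = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
states, context = encode_global(post, W_x, W_h)
```

In this scheme the same `context` vector would be handed to the decoder at every step, which is why the context generation is called trivial.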

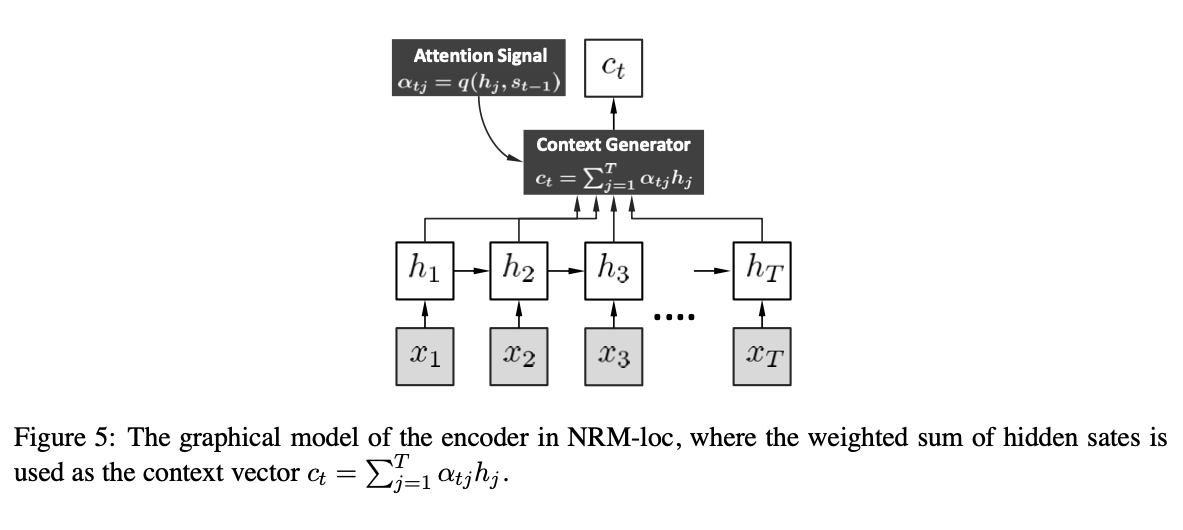

Local Scheme: the attention mechanism α_tj models the alignment between the inputs around position j and the output at position t, so it can be viewed as a local matching model. This local scheme was devised in (Bahdanau et al., 2014) for automatic alignment between the source sentence and the partial target sentence in machine translation. It has the advantage of adaptively focusing on important words of the input text according to the words of the response generated so far. An NRM with this local encoding scheme is referred to as NRM-loc.
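A small sketch may help make the local scheme concrete. It computes attention weights over the encoder states and forms the context as their weighted sum, c_t = Σ_j α_tj h_j, in the style of Bahdanau et al. The scoring function here is an assumption: a plain dot product between h_j and the previous decoder state stands in for the learned alignment model, and all names and numbers are hypothetical.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    z = sum(exps)
    return [e / z for e in exps]

def attention_weights(hs, s_prev):
    """alpha_tj for every position j.

    Assumption: the score is a dot product of h_j and the previous decoder
    state; the original alignment model is a small learned network instead.
    """
    scores = [sum(a * b for a, b in zip(h_j, s_prev)) for h_j in hs]
    return softmax(scores)

def local_context(hs, s_prev):
    """Context c_t as the attention-weighted sum of the encoder states."""
    alphas = attention_weights(hs, s_prev)
    dim = len(hs[0])
    c_t = [sum(alphas[j] * hs[j][i] for j in range(len(hs))) for i in range(dim)]
    return c_t, alphas

# Toy encoder states for a three-word post and a made-up decoder state.
hs = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
s_prev = [2.0, 0.0]
c_t, alphas = local_context(hs, s_prev)
```

Because the weights are recomputed from the decoder state at every step, the context changes as the response is generated, unlike the fixed h_T of the global scheme.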

Extensions: Local and Global Model

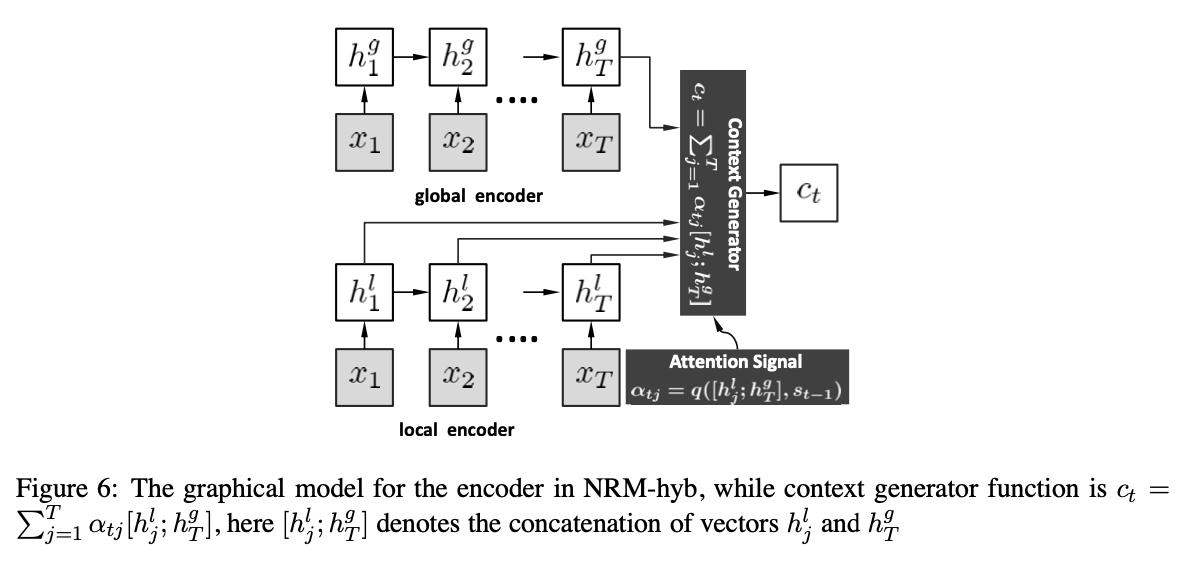

The global representation in NRM-glo may provide useful context for extracting the local context, and is therefore complementary to the scheme in NRM-loc. A natural extension is to combine the two models by concatenating their encoded hidden states to form an extended hidden representation for each time step, as illustrated in Figure 6. The summarization h^g_T is incorporated into c_t and α_tj to provide a global context for local matching. With this hybrid method, we hope that both local and global information can be introduced into the generation of the response. The model with this context generation mechanism is denoted NRM-hyb. We first initialize NRM-hyb with the parameters of NRM-loc and NRM-glo, trained separately, then fine-tune the encoder parameters while training the decoder parameters.
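The concatenation step can be illustrated as follows. This is a sketch under stated assumptions: the attention weights are passed in as given numbers rather than computed from the extended states, and the function names and values are hypothetical.

```python
def hybrid_states(local_states, global_final):
    """Extend each local state h_j by appending the global summary h^g_T."""
    return [h_j + global_final for h_j in local_states]  # list concatenation

def weighted_context(states, alphas):
    """Context c_t as the weighted sum of the (extended) states."""
    dim = len(states[0])
    return [sum(a * s[i] for a, s in zip(alphas, states)) for i in range(dim)]

# Toy values: two local states, one global summary, fixed attention weights.
local_states = [[1.0, 0.0], [0.0, 1.0]]
global_final = [0.3, -0.7]
alphas = [0.25, 0.75]

extended = hybrid_states(local_states, global_final)
c_t = weighted_context(extended, alphas)
```

Since the weights sum to one, the global half of `c_t` is just h^g_T itself, while the local half still shifts with the attention weights. In the full model the weights would also be computed from the extended states, so the global summary influences which positions are attended to.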

Disclosure

Most of the content here is taken directly from the paper. This post is meant to be one place for my notes on the papers I read. You can read the paper in its entirety here.